tl;dr: this started out as a short post about how all of journalism can benefit from learning to code. It is now a massive rant that maybe I’ll split up later. It covers:

- A quote by Seymour Hersh

- Two of my own projects as case studies:

  - SOPA Opera: Using programming to create greater transparency on a single political issue

  - Dollars for Docs: Using programming to drive a nationwide investigation

- How new, important stories can come from “old” ones

- A practical roadmap for non-programmers on where to start, with a list of resources and things to download

- A short list of inspirational ex-non-programmers

This post is inspired by a recent discussion on the NICAR (National Institute of Computer Assisted Reporting) mailing list, in which a journalism professor asked how her students should position themselves for a newspaper’s web developer job. The answer I suggested was: have them learn programming and have them publish projects online, on their own, that they can later show an employer.

But I’m becoming more convinced that programming – a decent grasp of it, not make-the-next-Facebook level – is an essential skill for all journalists, even ones that never intend to produce a webpage in their career. And for students, or any aspiring journalist, I think I can make the case that programming is absolutely the most important skill to learn in school (along with honing your interviewing, research and writing skills at the school paper/radio/TV station) if you want to improve your chances for a serious journalism career.

Hersh and Bamford

A few years ago, I attended a panel on investigative reporting that featured Seymour Hersh – the Pulitzer Prize-winning reporter who exposed the My Lai massacre – and James Bamford, a former Navy intelligence analyst who is well-respected for writing books that managed to penetrate the workings of even the super-secret NSA (affectionately known as the No Such Agency).


The discussion turned to the use of the Freedom of Information Act, a law that reporters wield to get sensitive, unpublished documents from the federal government. Given that the NSA isn’t known for being chatty, Bamford explained how his stories were put together through exhaustive uses of FOIA.

When an audience member asked Hersh how often he used FOIA, his response – and I’m quoting from memory here – was:

“Why the fuck would I FOIA documents?”

I can’t read Hersh’s mind, but I’m guessing that he wasn’t wholesale dismissing the importance of FOIA, which has been essential in countless investigative stories.

He probably meant that: He’s Seymour Hersh. He exposed the My Lai massacre. He’s a regular contributor to the New Yorker. The kinds of stories he writes for the New Yorker involve the type of people who wouldn’t be caught dead making a statement that would ever be reprinted on a document subject to a FOIA request.

And even if they were FOIAble, those requests take time (sometimes many years) and involve countless lawyers and legal wrangling. In the meantime, he’s up to his eyeballs in secret officials who, for some reason or other, are eager to spill their secrets to him, because he’s Seymour Hersh.

So, why the fuck would he FOIA documents?

Bamford doesn’t have quite that brand power and his targets are likely more reticent. But he’s learned – possibly through his Navy days – that there are plenty of important secrets in the stacks of documents that have been deemed fit for public consumption. It’s not always obvious, even among intelligence officials, to see how a mass of innocuous information inadvertently reveals big secrets.

So, back to the subject of aspiring journalists: to them, the already-employed journalists are the Seymour Hershes. These journalists have established themselves and their beat, which they can focus full-time on because they’re earning a salary to do so. Their phone contains the cell numbers of all the important officials who won’t ignore their 9 p.m. Sunday night call. When they write something, a large number of trees, barrels of ink, and/or corporate-purchased bandwidth are readily expended to make it known.

On the other end of the spectrum, the aspiring journalists are the Bamfords. Between work shifts, they have the same right as Joe Public to attend meetings and leave inquiries with contact@yourcity.gov. But for them, even the local police department might as well be the NSA. They aren’t going to be privy to hush-hush phone calls or let past the murder scene tape.

If this is your current vantage point, even if you intend to be a Hersh-style reporter, you’re going to have to Bamford your way into a field that has an increasing amount of noise and a corresponding shrinkage in paid, established positions.

Given this situation, I can think of no better strategy than to learn programming. This is a skill that not only makes more efficient every other journalism skill (writing, researching, publishing) but can, like Bamford’s relentless FOIAs, reveal stories that non-programming journalists will never be able to do, and in an unfortunate number of cases, even conceive of.

Learning the Hard Way

Zed Shaw, who isn’t a journalist but is renowned for both his code contributions and his widely-read (and free!) how-to-program books, puts it this way:

“Programming as a profession is only moderately interesting. It can be a good job, but you could make about the same money and be happier running a fast food joint. You’re much better off using code as your secret weapon in another profession.

People who can code in the world of technology companies are a dime a dozen and get no respect. People who can code in biology, medicine, government, sociology, physics, history, and mathematics are respected and can do amazing things to advance those disciplines.”

Note that he doesn’t have many romantic notions about programming as a profession. However, programming is something bigger than just a job: it’s an essential, game-changing skill.

Code, don’t tell

“Show, don’t tell” is how my high school journalism teacher taught us how to write. Instead of telling the reader something:

James Smith is one of the toughest football players on the team.

– show it, through observed evidence:

When the halftime whistle blew, James Smith walked to the sidelines and collapsed. He later was told that the neck pain he played with through the second quarter was caused by a fracture in his neck.

I guess “Code, don’t tell” doesn’t really make sense; it’s just my made-up way of saying that we have fantastically more ways than ever to tell – blog posts, retweets, status updates, auto-aggregations and other forms of repurposing – but we’re little better equipped to find and develop the actual stories. Programming is a skill that cuts through the noise, allows for the analysis and reporting on new substantive information sources, and even provides a way to create innovative story-telling forms (i.e. the web developer’s role).

So to follow my high school teacher’s advice, here’s an overview of my two most successful journalism projects so far, both done at ProPublica. As I explain later, both more or less originated from me sitting on my couch, being annoyed by what I saw as a lack of transparency. The first one, SOPA Opera, was initially self-published and probably could have been done entirely from the couch. The other, Dollars for Docs, was a full-out effort by my colleagues and me. But it was programming-driven at every phase.

SOPA Opera

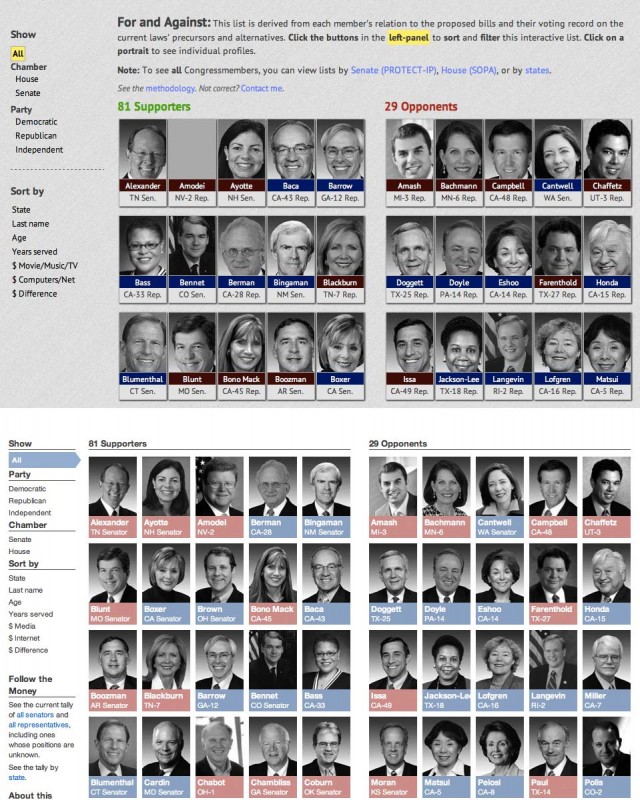

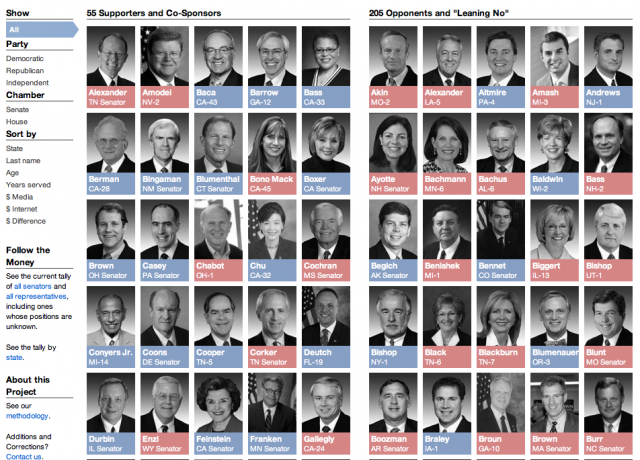

I won’t rehash the debate over this now-dead Internet regulation law, but the inspiration for the SOPA Opera news app was simply: I had read plenty of debate about SOPA for months. But when I wanted to see just which legislators actually supported it and their reasons for doing so, there wasn’t yet a great resource for that.

If you know about the official legislative site, THOMAS, and are familiar with its navigation, you could at least find the list of sponsors. But good luck trawling the Congressional committee sites to find transcripts and testimony related to the law. It goes without saying that a list of opponents doesn’t exist and is beyond the official scope of THOMAS anyway.

So SOPA Opera, boiled down, is a pretty pedantic concept: “Hey, here’s a list of Congressmembers and what I’ve found out so far about their positions on SOPA.”

In other words, changing this:

SOPA sponsors, on THOMAS

To this:

The gist of SOPA Opera could be done without any programming whatsoever. You could even build a static version in Photoshop and upload the image onto the Internet. So what role did programming play in this? It made it very easy to gather the already-available information, which included: the official list of sponsors, the boilerplate biographical and district information on every Congressmember (including their mug shots), and contribution data from the Center for Responsive Politics.

No exaggeration: a decent programmer could build a nice site from this data in about half-an-hour. The jazzy part of the site – the dynamic sorting of the list – was already built and offered as a free plugin to use (courtesy David DeSandro). It’s entirely possible to create SOPA Opera by hand, given a few days and an infinite amount of patience.

So programming allowed me to save my time and energy for the actual reporting. I thought about building a scraper to go through each Congressmember’s Facebook and Twitter page to search for the term “SOPA”. But until the blackout, most lawmakers had nothing to say on the topic. So at first, my “research” largely involved typing “SOPA [some congressmember’s name]” into Google News and usually finding nothing.

When the SOPA issue blew up during the Jan. 18 blackout, I didn’t have to do any searching, as Congressmembers pretty much rushed to make known their opposition to SOPA. SOPA Opera was designed in a way to make it easy for constituents to look up their representative and, if I had no information about him/her, tell me what they found out after talking to their representative. Or, in a few cases, Congressional staffers contacted me directly.

SOPA Opera easily broke the single-day traffic record at ProPublica. This was mostly due to blackout-participating sites like Craigslist that directed their traffic to us as a reference. Clearly, what caused the seismic shift on the SOPA debate were the mega-sites that coordinated the millions of emails and phone calls to lawmakers, and SOPA Opera was an indirect beneficiary of the increased public interest.

But I believe that SOPA Opera made at least one important contribution to the debate: it made very clear the level and characteristics of support enjoyed by SOPA. One thing that the THOMAS listing of sponsors fails to do is note the political parties of the lawmakers. I felt that this was a critical piece and it was easy to get and display. The result: many visitors to SOPA Opera who had believed that SOPA was a diabolical scheme by [whatever-party-they-oppose] were shocked at how SOPA’s support was so bi-partisan and broad.

I heard from a number of people who had been highly energized about the anti-SOPA debate yet were completely shocked that Sen. Al Franken – automatically assumed to be on the side of “Internet freedom” – was, in fact, a sponsor of SOPA’s counterpart in the Senate. This was no state secret: Sen. Franken has been passionate and outspoken in his support and was one of the few who didn’t back away after political support collapsed post-blackout.

SOPA Opera’s success probably owes less to my skill than to the dismal state of accessibility in our legislative process. This goes to show that even when you arrive extremely late to the game, it’s possible to make a significant impact by simply having an idea of how things can be better. This applies in just about any situation and profession. Programming just makes it much easier to push your creation forward.

Some technical details on self-publishing

I don’t want to dwell too much on the web-side of things, as that is just one specific use of programming. But to get back on the topic of how someone can position themselves for a web-related media job, SOPA Opera is a really excellent example of the potential in self-publishing.

SOPA Opera spent about a week on a domain (sopaopera.org) that I purchased for $10. It didn’t bear the ProPublica brand then, and I didn’t have time to promote it beyond a few tweets and submitting it to Hacker News and Reddit.

But in just a week, sopaopera.org had racked up about 150,000 pageviews before we migrated it to ProPublica:

That’s not a huge number in itself, and traffic to it increased exponentially under ProPublica’s umbrella. But it had gained enough notice that prominent sites were linking to it. The problem I had at the start – Googling a random lawmaker’s name and the term “SOPA” and finding absolutely nothing – was solved, as Google highly-indexed all of the auto-generated lawmaker pages on SOPA Opera. At least one Congressmember’s office emailed me to update his page.

Not bad for a holiday break project and $10, using free resources and tools that are available to anyone with a computer. Back when I applied for newspaper jobs, I had to carry a portfolio of cut-out newspaper articles to show editors that at least a few people (my college paper and the one newspaper I interned at) had been willing to waste trees and ink on me. You were out of luck if all you had were a bunch of links to blog posts.

The mindset is different today: articles published on a traditional publication’s website, or at an online-only organization like Huffington Post, can count as legit clips. But I’d like to think that showing a full-blown website that includes not only traditional reporting content, but examples of how visual and interface design can tell a new story, as well as being able to provide concrete metrics (pageviews, referring links) of impact, would be even more impressive to today’s news editors.

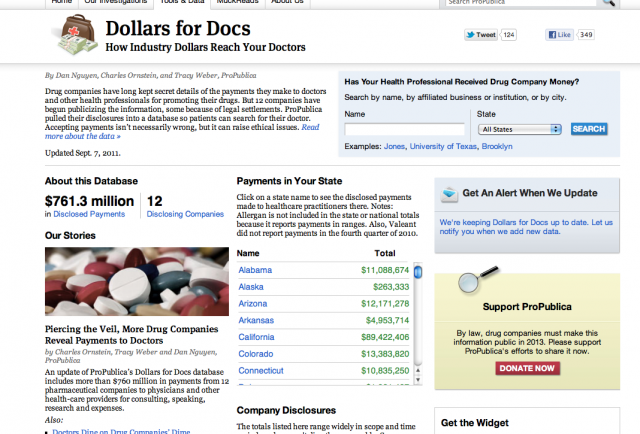

Dollars for Docs

Like SOPA Opera, Dollars for Docs (aka “D4D”) is late to its respective topic. It is a long accepted practice for medical companies to pay doctors to promote their products, not much different from a notable athlete who endorses a shoe that she considers to be the best for her sport. But in recent years, lawmakers and regulators have called for more transparency of these financial ties to prevent cases in which doctors are unduly influenced by their benefactors.

Data on company-to-doctor payments is at least two decades old: Minnesota enacted a law in 1993 requiring companies to disclose their payments. However, that “data” came in the form of paper records that had to be hand-entered into a computer – after, of course, you visited the records’ actual storage location and photocopied each page at 25 cents a pop. For that reason, the records were collected but unexamined for at least a decade until Dr. Joseph Ross (now at Yale University) and the Public Citizen advocacy group collected and analyzed the records.

In 2007, they published their findings in the Journal of the American Medical Association, with the conclusion that the payment records were “compromised by incomplete disclosure as well as insufficient access”:

- In Vermont (which enacted a public disclosure law in 2001), most disclosures were redacted for “trade secrets” reasons. Of the publicly disclosed payments, 75% of them lacked information identifying the recipient.

- In Minnesota, many of the companies had years in which they reported nothing.

- The “public” disclosures were pretty much inaccessible to the public. Dr. Ross and Public Citizen had to go to court to get the Vermont records.

Dr. Ross told me that after his study was published, not only was it apparent that the public was in the dark, but doctors themselves had no idea that the data were even being collected. The Minnesota pharmacy board was subsequently so swamped by requests from other researchers, hospitals and litigants that it began publishing the disclosures online.

At around the same time, the New York Times published its own investigation using the Minnesota records. They analyzed both the company disclosures and Medicaid payments to Minnesota psychiatrists and found that during a time period in which company payments to Minnesota psychiatrists increased, antipsychotic drug prescriptions for children jumped more than 900 percent.

The Times investigation (also in 2007) sparked a large political fight in which U.S. Senate investigators targeted prominent psychiatrists whose work had expanded the use of antipsychotic drugs as treatment for children.

The end result of this was a proposed federal law to mandate these payment disclosures nationwide. This law was later folded into the 2010 health care reform package. By 2013, the federal government will publish a database of these disclosures.

Couch database

The idea for D4D was sparked from something I wrote for my blog one evening. I was writing some programming tutorials to show journalists, well, how programming could be used for everyday reporting, and I needed a current example. I came across this Times article, Pfizer Gives Details on Payments to Doctors, which reported that Pfizer was fulfilling the terms of a legal settlement by publishing a searchable database of the health professionals it paid. At that point, it was the fourth such drug company that had disclosed its payments in advance of the 2013 law – most of the others had done so also as part of settling their own lawsuits.

At that point, I knew virtually nothing about the issue. But what I did see was that Pfizer’s site seemed unnecessarily cumbersome. Though the disclosures were mandated, Pfizer’s site made it difficult to do simple analyses such as finding the professionals who had received substantial amounts or even the sum of the database’s payments. So I wrote a scraper and published the code and data for others to use.
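Once records like these are scraped into a delimited file, the simple analyses that the site made difficult take only a few lines. Here is a minimal Python sketch, not the code from the original blog post. The column names, people and amounts are all made up for illustration.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample rows (invented names and amounts, not real disclosures).
SAMPLE_CSV = """name,city,state,amount
Jane Roe,Austin,TX,"12,500"
John Doe,Boston,MA,800
Jane Roe,Austin,TX,"30,000"
"""

def parse_amount(text):
    """Turn a string such as '$12,500' into a float."""
    return float(text.replace("$", "").replace(",", "").strip())

def summarize(csv_text):
    """Return (grand_total, recipients ranked by total received)."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Key on name plus state so two people who share a name in
        # different states are not lumped together.
        totals[(row["name"], row["state"])] += parse_amount(row["amount"])
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return sum(totals.values()), ranked

grand_total, ranked = summarize(SAMPLE_CSV)
print("Sum of all payments:", grand_total)
for (name, state), amount in ranked:
    print(name, state, amount)
```

With a real file you would pass `open("payments.csv", newline="")` to `csv.DictReader` instead of the in-memory sample; the filename here is just a placeholder.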

Data-scraping and pharmaceutical payments isn’t a high-traffic topic, but the blog post caught the eyes of a few important people. My colleagues Charles Ornstein and Tracy Weber, both Pulitzer Prize-winning health reporters, were well-informed about the issue but didn’t know how feasible it would be to do a broad analysis of the data. I think I would eventually have written a scraper and published the delimited data for every drug company that had so far been required to disclose (this article in the Times, Data on Fees to Doctors Is Called Hard to Parse, was a particular inspiration), but Charlie and Tracy knew how to turn it into a strong investigation.

Another side-benefit of self publishing: A reporter at PBS had also been working to collect and parse the data. He noticed my Pfizer post, though, and rather than being competitors, PBS teamed up with ProPublica in conducting the investigation.

Done stories are never dead. They aren’t even done.

Even with the groundbreaking work already done by Dr. Ross, Public Citizen and the Times in 2007, the subsequent Senate investigations, and the impending official database in 2013, there was still room for D4D to become a valuable, innovative investigation. It had considerable impact on the debate, prompting companies and medical institutions to change their disclosure and conflict-of-interest policies. D4D is currently the most-viewed resource at ProPublica.

You can read our series coverage here.

The most obvious way that D4D differed from previous investigations is that it looked at the available nationwide set, not just Minnesota’s. Some of the data-driven angles we took included:

- Cross-referencing our payments database against all the state medical licensing databases to see if any of the highly-paid doctors had serious disciplinary issues. Prescription data is not publicly available, so this was an alternative way of scrutinizing companies’ assertions that they paid doctors for their prestige, and not their prescribing habits. It essentially involved writing a scraper for each state website.

- Cross-referencing our payments database against the faculty list at various medical schools to see if there were discrepancies in what the doctors disclosed to their institutions.

- Examining the differences between the quarterly reports, to see if payment levels dropped and why. Since the disclosures are largely unaudited, collecting and normalizing the data for comparison is the only way to double-check the completeness of the reports.

- Much of D4D’s success came in our willingness to share our data with our reporting partners, and then later, to any newsroom that asked. This spawned hundreds of independently reported stories.
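The cross-referencing angles above all boil down to the same move: normalize the names in two lists, then look for overlaps. A rough Python sketch of that idea follows. It is not D4D’s actual code; the records, field names and the `normalize` rules are hypothetical and far cruder than what a real investigation needs.

```python
import re

def normalize(name):
    """Lowercase, strip punctuation and drop titles so variants compare equal."""
    cleaned = re.sub(r"[^a-z\s]", "", name.lower())
    tokens = [t for t in cleaned.split() if t not in {"dr", "md"}]
    return " ".join(tokens)

# Invented example records standing in for a payments database
# and a state licensing board's disciplinary list.
payments = [
    {"name": "Dr. Jane Roe", "state": "TX", "amount": 42500},
    {"name": "John Doe, M.D.", "state": "MA", "amount": 800},
]
disciplinary_actions = [
    {"name": "JANE ROE", "state": "TX", "action": "License suspended"},
]

def cross_reference(payments, actions):
    """Pair each payment record with any action sharing a name and state."""
    index = {}
    for action in actions:
        key = (normalize(action["name"]), action["state"])
        index.setdefault(key, []).append(action)
    matches = []
    for payment in payments:
        key = (normalize(payment["name"]), payment["state"])
        for action in index.get(key, []):
            matches.append((payment, action))
    return matches

matches = cross_reference(payments, disciplinary_actions)
for payment, action in matches:
    print(payment["name"], payment["amount"], action["action"])
```

A name-and-state match is only a lead, not a finding: two different doctors can share a name, so every hit still has to be confirmed by a reporter.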

So even on a well-trodden story, there are still countless angles when you combine a keen reporter’s instinct with an ability to collect data. A prevalent theme in the journalism industry – especially in the age of Twitter – is the drive to be first. I understand that it’s important in a ratings-sweep context, and it certainly gets the blood going when you’re competing for a story, but I’ve never thought that it was good for journalism or particularly useful to news consumers.

This is why I enjoy data-driven journalism. On any given topic, there are so many valid and substantial ways to cross-examine the evidence behind a story and produce meaningful stories. And as time passes, the analysis only becomes more interesting, not less, because more and more data is added to the picture. Data-driven journalism can be done through simple use of Excel. But programming, as I explain in the next section, can vastly increase the opportunities and depth of these analyses.

A sidenote: The hardest part of D4D was the logistics, which were only manageable through programming. It’s too boring to go into detail, but D4D required a collaborative reporting process more disciplined than “just send that Word.doc as an attachment.” I don’t foresee a project of D4D’s scale being attempted by many other organizations, because of the difficulty in managing all the moving parts.

Never attribute to malice that which…

The most interesting reaction I got from D4D came not from doctors, but from researchers, compliance officers, and even federal investigators whose jobs it was to monitor these disclosures. They were thrilled that D4D made it so easy for them to check up on things. What was surprising to me was that I just assumed that everyone who had a professional stake in overseeing these disclosures had already collected the data themselves. The scraping-and-collecting of the company reports was by far the easiest part of D4D, and even if you couldn’t program you could at least hack together a system of copying-and-pasting from the various data-sources, if such information was vital to your job.

The truth is that the concept of “user interface” is as critical to an investigation as it is in separating successful tech startups from their clunky, failed competitors. I occasionally get asked for advice by researchers on their own projects. What stalls a surprising number of interesting investigative projects and analyses is not something as malicious as a shady CEO or the threat of a lawsuit, but problems as benign as: a company over the years has hundreds of datafiles, all zipped and scattered across many webpages. Is it possible to somehow download them all, unzip them, and put them into a database (or Excel) just so I can find if someone’s name is in there? Or: If I could only fix the few places where the agency screwed up in outputting this comma-delimited data file, I could analyze it in Excel.

If you can’t program, this isn’t a trivial problem: working with ten such files is an inconvenience. When there are 100 files, then the momentum for an inquiry might just stop dead, especially if the inquiry arises from curiosity instead of certainty (think of how many great stories and investigations have come out of such casual inquiries). However, with some basic programming, the difference between organizing 10 files and 10,000 files is a matter of milliseconds. A programmer thus has the power to not only work with already-normalized datasets and produce interesting stories, but he/she can (efficiently) create datasets that otherwise would have never been examined.
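To make that concrete, here is a hedged Python sketch of the “is someone’s name in any of these zipped files?” problem. It assumes the archives have already been downloaded into one folder and that each contains UTF-8 CSV files; the folder layout, filenames and sample rows are invented so that the example runs on its own.

```python
import csv
import io
import tempfile
import zipfile
from pathlib import Path

def find_name(folder, needle):
    """Yield (zip name, member name, row) for each CSV row mentioning needle."""
    needle = needle.lower()
    for zip_path in sorted(Path(folder).glob("*.zip")):
        with zipfile.ZipFile(zip_path) as archive:
            for member in archive.namelist():
                if not member.lower().endswith(".csv"):
                    continue
                # Read the CSV straight out of the archive, no manual unzipping.
                with archive.open(member) as raw:
                    text = io.TextIOWrapper(raw, encoding="utf-8", newline="")
                    for row in csv.reader(text):
                        if any(needle in cell.lower() for cell in row):
                            yield zip_path.name, member, row

def make_demo_archives(folder, count=100):
    """Create stand-in zip files so the sketch runs without real data."""
    for i in range(count):
        body = "name,amount\nSam Smith,100\n"
        if i == 42:
            body += "Jane Roe,2500\n"
        zip_path = Path(folder) / f"report_{i:03d}.zip"
        with zipfile.ZipFile(zip_path, "w") as archive:
            archive.writestr(f"report_{i:03d}.csv", body)

with tempfile.TemporaryDirectory() as folder:
    make_demo_archives(folder)
    hits = list(find_name(folder, "jane roe"))

print(hits)
```

The loop does not care whether the folder holds 10 archives or 10,000; only the running time changes, not the amount of human effort.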

To reiterate: there are an astonishing number of stories and inquiries that are derailed by what are trivial technical issues to any half-competent programmer. This is alarming from a civic perspective, yet extremely exciting if you’re someone with the right skills at the right time, as you’ll never want for ideas.

A practical road to programming

OK, enough abstract talk. The amazing promise of programming is that there are so many opportunities. This leads to its biggest problem when trying to learn it: there are too many places to start.

This section contains some advice. It may not be the best advice for everyone, but at least everything I mention below is absolutely free to use and to learn from.

The Basics

If you haven’t already, create a Twitter account. Stop kvetching about how “no one wants to read what I ate for breakfast” because that casually implies that people would want to read your 100,000 word opus, as soon as you finish it. They won’t.

But having a Twitter account provides one avenue to spread your work and just as importantly, a channel to learn from people who aren’t just tweeting about their breakfasts.

Get a Dropbox. Get used to putting stuff on the cloud. Not your sensitive documents, but things like e-reference books and datasets and code. This is much better (and in some ways, more secure) than emailing things to yourself.

Create a Google account. Even if you don’t use it for email, Google Documents is extremely useful. And you may find use from the other parts of Google’s ecosystem.

Get a second or third browser: If you’re paranoid about Twitter/Facebook/Google cookies tracking you, then use one browser to handle those accounts and another browser to do all your other web-browsing.

Data stuff

If you don’t have Excel, you can download OpenOffice’s capable suite. That said, Google Docs is probably the easiest way to get into keeping spreadsheets, with the added bonus of being in the cloud and thus easy to do collaborations and to use your programming with. Again, be cautious about putting very sensitive data there. But I’d argue that the cloud is still safer than keeping everything on a stealable-Macbook.

Google Refine: this was a project formerly known as Gridworks. It runs in the browser, but unlike Google Docs, you don’t need a Google Account or to be online to use it. It’s similar to a spreadsheet except that you won’t use it to calculate an average/sum of a column or to make charts. It’s for cleaning data, to quickly determine that “John F. Kennedy”, “Jack F. Kennedy”, “John Fitzgerald Kennedy” and “J.F. Kennedy” are all the same person. There has been some investigative data-work that would not have been possible without this tool. Check out the video introduction here; I’ve also written a tutorial at ProPublica.

Given the number of important stories that basically boil down to finding someone’s name several times in a database, it’s a little amazing to me that every serious reporter hasn’t at least tried Google Refine.
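For a feel of what this kind of name cleanup involves, here is a simplified Python sketch of one common idea, key-collision clustering: boil each value down to a “fingerprint” and group the values whose fingerprints collide. This is my own approximation for illustration and not Google Refine’s actual code; the sample names are made up.

```python
import re
import unicodedata
from collections import defaultdict

def fingerprint(value):
    """Reduce a string to a key that ignores case, accents, punctuation and word order."""
    ascii_text = (
        unicodedata.normalize("NFKD", value)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    cleaned = re.sub(r"[^\w\s]", "", ascii_text.lower())
    return " ".join(sorted(set(cleaned.split())))

def cluster(values):
    """Group values that share a fingerprint; keep only groups with more than one member."""
    groups = defaultdict(list)
    for value in values:
        groups[fingerprint(value)].append(value)
    return [group for group in groups.values() if len(group) > 1]

names = [
    "John F. Kennedy",
    "Kennedy, John F.",
    "john f kennedy ",
    "Jack F. Kennedy",
]
for group in cluster(names):
    print(group)
```

Note the limits: this catches differences in case, punctuation and word order, but a nickname like “Jack” or a spelled-out “Fitzgerald” produces a different fingerprint. Those cases need fuzzier matching and, in the end, a human deciding whether two records are really the same person.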

Programming

Don’t get stalled by trying to figure out which is the best language. The three most popular right now (Ruby, Python and JavaScript) will serve your needs well, and you’ll find it relatively easy to pick up the other two after learning one.

That said, there’s one big difference: Ruby and Python are more general purpose scripting languages. You can use them to sort your files, process (and build) a database, and even build a full-out website (you may have heard of Ruby on Rails and Django).

JavaScript is most typically used for web interactivity in the browser, everything from animating buttons to full-fledged applications. Because it’s in every browser, it takes no work to try it out and to produce interactive bits. It takes a little more work to set up JS to do things like web-scraping or local file processing.

JS has an additional advantage in that there are many interactive tutorials that you can access through your browser. Codecademy is one of the best known ones.

Programming resources

Zed Shaw’s “Learn Python the Hard Way” is one of the most popular beginner-level (and free) ebooks. There is also a Ruby version.

A little self-promotion: for people who best learn through practical projects, I’ve been working on my own Ruby beginner’s guide, tentatively titled the Bastards Book of Ruby. It’s a work in progress but you’ll find some ideas on starter projects to work towards (a good start is writing a script to download and store all your tweets).

HTML

Don’t learn HTML. That is, don’t take a course in HTML. Learn enough to know that the HTML behind a webpage is just plain text. And learn enough to understand how:

<a target="_blank" href="http://en.wikipedia.org/wiki/HTML">Wikipedia's entry on HTML</a>

Creates a link that takes you to Wikipedia in a new window, like this: Wikipedia’s entry on HTML

That’s basically enough to get the concept of HTML (and the idea of meta-information) and to begin scraping webpages. One of the fastest ways to learn as you go is to get acquainted with your web browser’s inspector.
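To show how little HTML you need to know before scraping becomes possible, here is a small Python sketch that pulls the address and the text out of the link above. It uses only the standard library’s `html.parser`; the `LinkCollector` class name and the surrounding paragraph tag are my own inventions for the example.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect (href, link text) pairs from every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag we are currently inside, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None

page = (
    '<p>See <a target="_blank" href="http://en.wikipedia.org/wiki/HTML">'
    "Wikipedia's entry on HTML</a> for more.</p>"
)
parser = LinkCollector()
parser.feed(page)
parser.close()
print(parser.links)
```

The page is just text, the tag’s attributes are the meta-information, and a scraper is a program that reads one to get at the other.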

People to learn from

I’m not a particularly inspiring example of a journo-coder: I took up computer engineering because I was afraid there wouldn’t be many journalism jobs, so I kind of half-stumbled into combining reporting with code because it’s easy to learn programming fundamentals during college. This is why if you’re a college student now, I strongly advise you to pick up programming at a time when learning is your main job in life.

Much more impressive to me are people who were doing well in their day jobs but decided to pick up programming in their spare hours – and then returned to do their day jobs with newfound inspiration and possibilities.

John Keefe (WNYC) – About a year-and-a-half ago, I remember John coming to Hacks/Hackers events to watch people code and to continually apologize for having to ask what he thought were dumb questions. In an incredibly short time, Keefe learned enough hacking to produce some great, creative apps and now heads WNYC’s data team, and also leads the discussion among news orgs on how to modernize the way we do things like election coverage.

Zach Sims – Sims is a co-founder of Codecademy. He was a poli-sci major who ventured into tech entrepreneurship but was frustrated that his lack of technical skills hindered his work. He learned programming on his own and, with a co-founder, created Codecademy to teach others how to program. Codecademy itself is one of the hottest recent startups.

Neil Saunders – I stumbled across Neil Saunders’ blog while looking for R + Ruby examples. His blog is titled “What You’re Doing is Rather Desperate”, inspired by the reaction of a colleague who was apparently unimpressed with his use of programming in his bioscience job.

It’s a misconception that scientifically-minded professionals also know how to program. In fact, some don’t even have basic computer skills. Saunders not only publishes his code, but shows how others in his field can greatly improve their research with programming skills.

Kaitlyn Trigger – As this TechCrunch article puts it, Kaitlyn Trigger was a poli-sci major who “never took any computer classes.” She has been together with Instagram co-founder Mike Krieger but had been frustrated that she didn’t understand his work. So she picked up/downloaded Learn Python the Hard Way, learned the Python-based web framework Django, and created Lovestagram as a Valentine’s Day present.

It’s not just a cute story – learning Python and Django and making something within 2 months in your spare time is a pretty incredible achievement. It’s an awesome example of how having a project in mind can really help you learn code.

Matt Waite – Waite was an award-winning newspaper reporter before becoming a web developer. He went on, as a web developer, to win the most prestigious of journalism prizes: a Pulitzer for PolitiFact. He now teaches at the University of Nebraska-Lincoln and keeps a blog related to his work with journalism students.